I know I've posted about stupid LLM tricks before, but somehow they never cease to astonish me.

We recently rewatched Gary Gulman's comedy routine about how the U.S. states got their two-letter abbreviations (it's hilarious):

Alabama? AL. Well crap, that was easy. We'll be done before they stop serving breakfast in the hotel restaurant!

The next state? Alaska. In the routine, Gary says this happens 27 more times, which I think isn't quite right, but it's probably close.

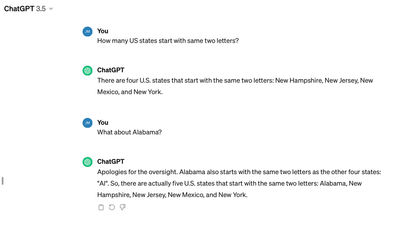

So we decided to ask ChatGPT: How many US states start with the same two letters?

Four, it said, and not one of them was Alabama (New Hampshire, New Jersey, New Mexico, and New York). And I love how it doubled down when I asked about Alabama. Its new answer: Alabama, New Hampshire, New Jersey, New Mexico, and New York.

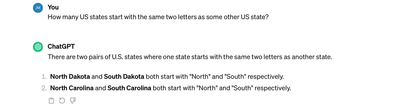

Did we poison the information stream here? Or maybe the question is poorly phrased. Let's try again: "How many US states start with the same two letters as some other US state?"

Inexplicably, the answer this time is "North Dakota and South Dakota; plus North Carolina and South Carolina." Among other things, ChatGPT doesn't seem to know the difference between "letters" and "words." Which is, on one level, not surprising (they are likely to be terms used in similar contexts), and on another level rather shocking (because they are not remotely the same thing).
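
For what it's worth, the rephrased question has a checkable answer. Here's a minimal Python sketch, assuming the question means the first two letters of each state's full name, compared case-insensitively. The function name `shared_prefix_groups` and the hard-coded state list are made up for illustration.

```python
from collections import defaultdict

# The 50 U.S. states, typed in by hand for this sketch.
STATES = [
    "Alabama", "Alaska", "Arizona", "Arkansas", "California", "Colorado",
    "Connecticut", "Delaware", "Florida", "Georgia", "Hawaii", "Idaho",
    "Illinois", "Indiana", "Iowa", "Kansas", "Kentucky", "Louisiana",
    "Maine", "Maryland", "Massachusetts", "Michigan", "Minnesota",
    "Mississippi", "Missouri", "Montana", "Nebraska", "Nevada",
    "New Hampshire", "New Jersey", "New Mexico", "New York",
    "North Carolina", "North Dakota", "Ohio", "Oklahoma", "Oregon",
    "Pennsylvania", "Rhode Island", "South Carolina", "South Dakota",
    "Tennessee", "Texas", "Utah", "Vermont", "Virginia", "Washington",
    "West Virginia", "Wisconsin", "Wyoming",
]


def shared_prefix_groups(names, n=2):
    """Group names by their first n letters; keep only prefixes used more than once."""
    groups = defaultdict(list)
    for name in names:
        groups[name[:n].upper()].append(name)
    return {prefix: members for prefix, members in groups.items() if len(members) > 1}


groups = shared_prefix_groups(STATES)
total = sum(len(members) for members in groups.values())

for prefix, members in sorted(groups.items()):
    print(prefix, "->", ", ".join(members))
print(total, "states in", len(groups), "groups")
```

By this reading, 25 of the 50 states share their first two letters with at least one other state, spread across nine prefixes, and Alabama is very much one of them.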

One might say that ChatGPT wasn't developed to play stupid party games. But think about the silly things you google when having casual conversation with your friends. Often successfully. The internet is all about stupid party games, so it's not entirely unfair to judge new products that way.